Getting Started with robotis_lab

Overview

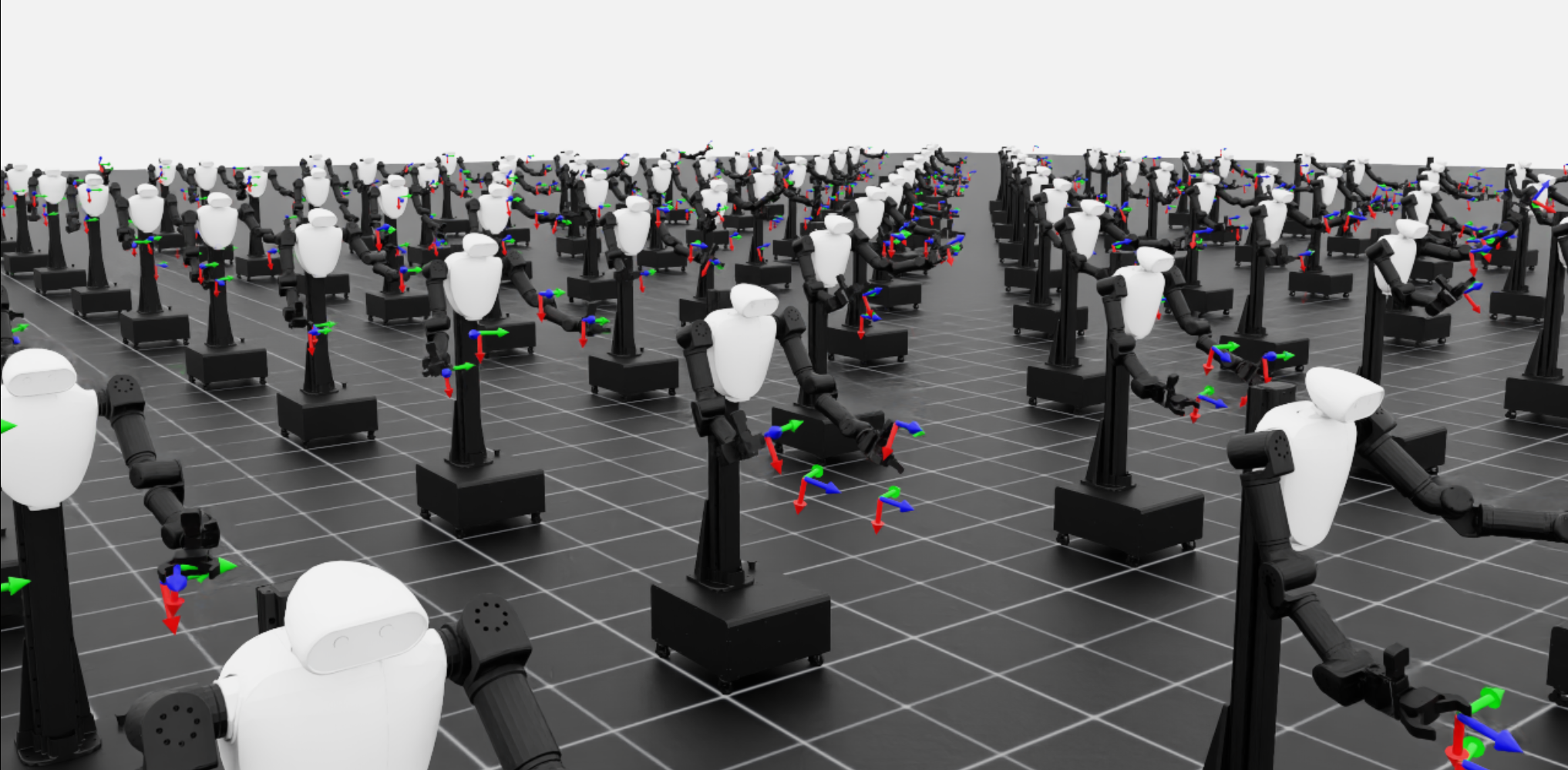

robotis_lab is a research-oriented repository built on Isaac Lab for running reinforcement learning (RL) and imitation learning (IL) experiments with Robotis robots in simulation. It provides simulation environments, configuration tools, and task definitions tailored to Robotis hardware, and builds on NVIDIA Isaac Sim’s GPU-accelerated physics engine and Isaac Lab’s modular RL pipeline.

INFO

This repository currently requires Isaac Lab v2.0.0 or later.
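To confirm that an Isaac Lab checkout meets this requirement, a small check like the one below can help. It is a minimal sketch and not part of robotis_lab. The function names are hypothetical, it assumes the Isaac Lab repository keeps its release version in a `VERSION` file at its root (`git -C IsaacLab describe --tags` is an alternative if it does not), and it relies on GNU `sort -V` for version ordering.

```shell
# Hypothetical helper: succeeds (exit status 0) when version $1 >= version $2.
# Uses GNU `sort -V`, which orders version strings component by component.
version_ge() {
  local have="$1" need="$2"
  # If the required version sorts first (or ties), the installed one is new enough.
  [ "$(printf '%s\n%s\n' "$need" "$have" | sort -V | head -n 1)" = "$need" ]
}

# Hypothetical helper: checks an Isaac Lab checkout against the v2.0.0 minimum.
# Assumption: the checkout stores its release version in a VERSION file at its root.
check_isaaclab_version() {
  local repo="${1:-./IsaacLab}" need="2.0.0" have
  # Read the version and strip whitespace; fail if the file cannot be read.
  have="$(tr -d '[:space:]' < "$repo/VERSION")" || return 1
  if version_ge "$have" "$need"; then
    echo "Isaac Lab $have meets the minimum ($need)"
  else
    echo "Isaac Lab $have is older than $need" >&2
    return 1
  fi
}
```

After cloning Isaac Lab, `check_isaaclab_version ./IsaacLab` prints a confirmation, or returns a non-zero status if the checkout is older than v2.0.0.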

Installation

Follow the Isaac Lab installation guide to set up the environment.

Instead of the recommended local installation, we installed and ran Isaac Lab within a Docker container environment to simplify dependency management and ensure consistency across systems.Clone the Isaac lab Repository:

git clone https://github.com/isaac-sim/IsaacLab.git- Start and enter the Docker container:

# start

./IsaacLab/docker/container.py start base

# enter

./IsaacLab/docker/container.py enter base- Clone the robotis_lab repository (outside the IsaacLab directory):

cd /workspace && git clone https://github.com/ROBOTIS-GIT/robotis_lab.git- Install the robotis_lab Package

cd robotis_lab

python -m pip install -e source/robotis_lab- Verify that the extension is correctly installed by listing all available environments:

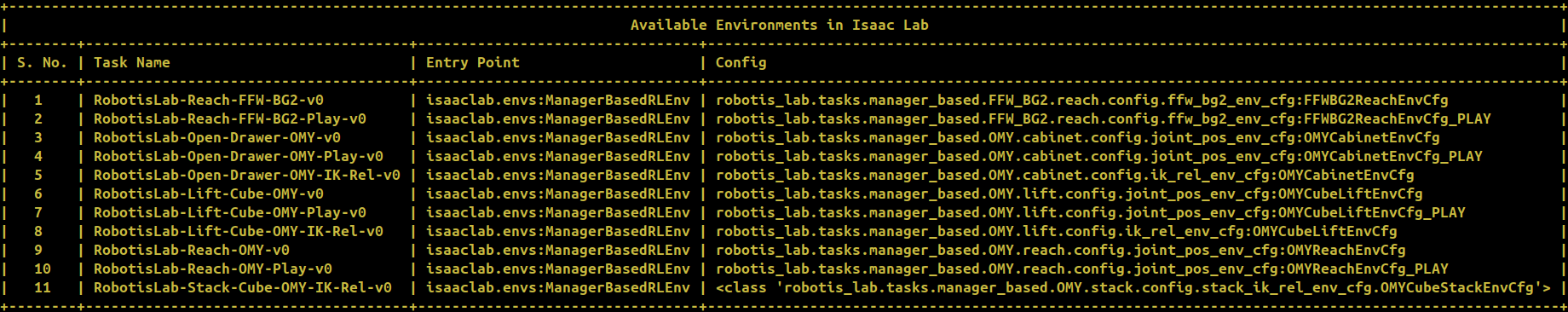

python scripts/tools/list_envs.pyOnce the installation is complete, the available training tasks will be displayed as shown below:
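Because `list_envs.py` reports every registered task, the Robotis tasks can be pulled out of its output with a short filter. This is a hypothetical helper, not part of the repository, and it assumes Robotis task IDs follow the `RobotisLab-<...>-v<N>` pattern used in this guide.

```shell
# Hypothetical helper: print each RobotisLab task ID found on stdin once.
# Assumption: task IDs look like "RobotisLab-<name>-v<N>", e.g. RobotisLab-Reach-FFW-BG2-v0.
filter_robotis_tasks() {
  # -o prints only the matching part of each line; sort -u removes duplicates.
  grep -oE 'RobotisLab-[A-Za-z0-9-]+-v[0-9]+' | sort -u
}
```

Run it from the robotis_lab directory inside the container, for example `python scripts/tools/list_envs.py | filter_robotis_tasks`.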

Try examples

Reinforcement learning

FFW-BG2 Reach task

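Training runs 512 parallel environments without rendering (`--headless`); playback runs the trained policy in 16 environments so it can be inspected visually.

```shell
# Train
python scripts/reinforcement_learning/skrl/train.py --task RobotisLab-Reach-FFW-BG2-v0 --num_envs=512 --headless

# Play
python scripts/reinforcement_learning/skrl/play.py --task RobotisLab-Reach-FFW-BG2-v0 --num_envs=16
```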
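To replay a specific run instead of relying on the play script's default checkpoint selection, a helper like the one below can locate the newest checkpoint file. It is a sketch under two assumptions: that training writes skrl checkpoints as `*.pt` files inside a `checkpoints` directory under `logs/skrl/` (the layout Isaac Lab's skrl scripts use), and that robotis_lab's `play.py` keeps Isaac Lab's `--checkpoint` option. The function name is hypothetical, and it requires GNU `find`.

```shell
# Hypothetical helper: print the most recently modified checkpoint under a log root.
# Assumption: checkpoints are *.pt files in a "checkpoints" directory, e.g.
#   logs/skrl/<experiment>/<run>/checkpoints/agent_<step>.pt
latest_checkpoint() {
  local root="${1:-logs/skrl}"
  # GNU find prints "<mtime> <path>"; sort by mtime, keep the newest, drop the mtime.
  find "$root" -type f -path '*/checkpoints/*.pt' -printf '%T@ %p\n' \
    | sort -n | tail -n 1 | cut -d' ' -f2-
}
```

For example: `python scripts/reinforcement_learning/skrl/play.py --task RobotisLab-Reach-FFW-BG2-v0 --num_envs=16 --checkpoint "$(latest_checkpoint logs/skrl)"`.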