Physical AI with Imitation Learning

Learn from human demonstrations and adapt to real-world changes through continuous interaction and intelligence.

Learn from humans, perform like experts.

Progressing toward advanced Physical AI through a scalable research lineup

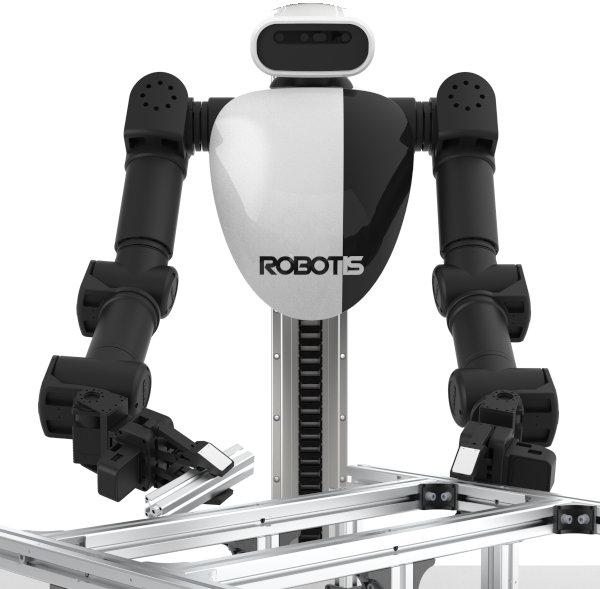

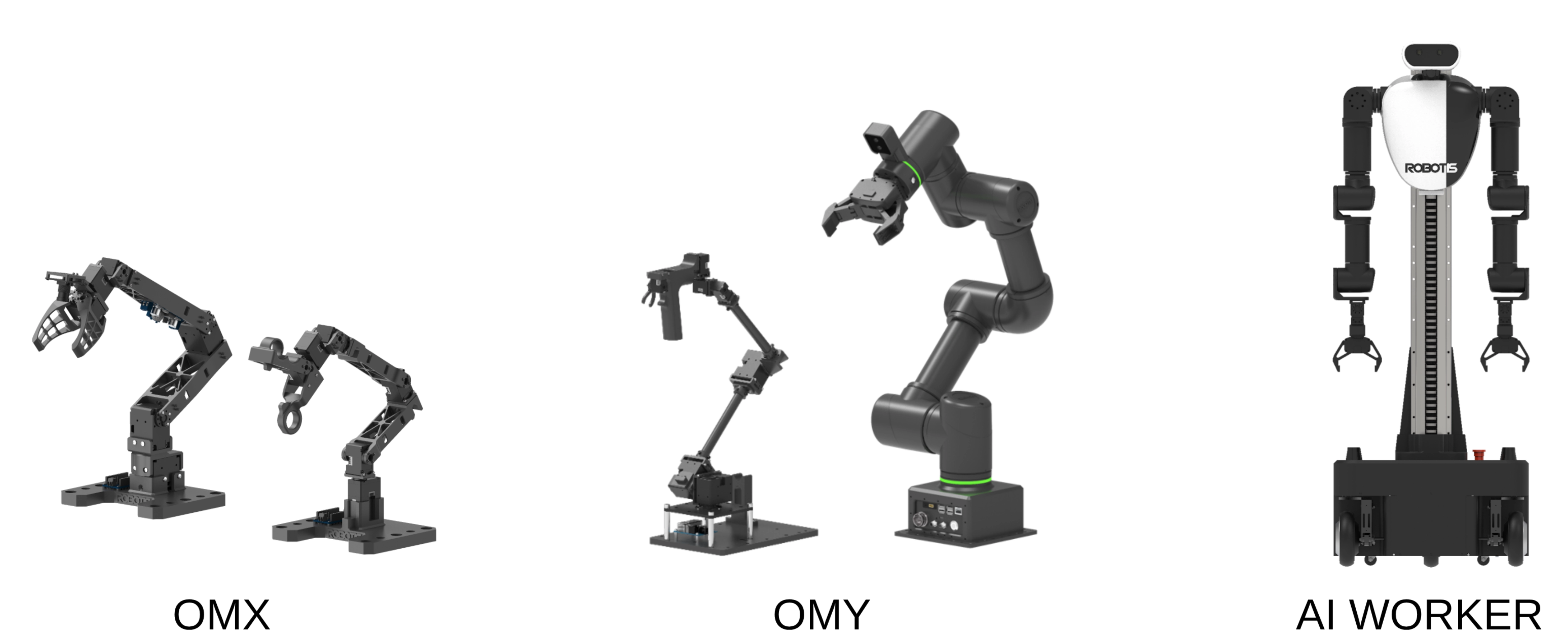

The ROBOTIS Physical AI Lineup consists of three scalable levels of research-focused robots:

- Level 3 (Enterprise): AI Worker, semi-humanoid robot systems (19 to 25 DOF robot body)
- Level 2 (Middle): OMY, advanced AI manipulators (6 DOF robot arm + gripper)
- Level 1 (Entry): OMX, cost-effective AI manipulators (5 DOF robot arm + gripper)

Each level supports a progressive research journey in Physical AI, beginning with basic motion learning and continuing through full-body imitation to autonomous operation.

At ROBOTIS, our vision for Physical AI is to solve real-world industrial and societal problems that traditional, rule-based systems cannot. We believe that true intelligence emerges when robots learn from humans, adapt to dynamic environments, and perform safely and autonomously in the physical world. Through our scalable lineup, from entry-level manipulators to full-body robots, we aim to:

By embedding intelligence into physical systems, we take a step closer to a future where robots collaborate with people, extend human capabilities, and bring freedom from repetitive or dangerous labor.

Physical AI refers to artificial intelligence that learns and acts through real-world physical interaction using robotic bodies.

Unlike traditional AI, which operates purely in simulation or digital environments, Physical AI:

This approach enables learning that is grounded in reality, shaped by friction, gravity, uncertainty, and the complexity of the physical world.

Physical AI allows us to:

By embedding intelligence into physical systems, we open the door to AI that can not only understand the world, but also act in it safely and effectively.