Model Inference with Web UI

Once your model is trained, you can deploy it on the OMY for inference.

Model Deployment and Inference

1. Set Up the Physical AI Tools Docker Container

WARNING

If Physical AI Tools is already set up, you can skip this step.

If you haven't set up the Physical AI Tools Docker container, please refer to the link below for setup instructions.

Setup Physical AI Tools Docker Container

2. Prepare Your Model

Choose one of the following methods.

Option 1) Download your model from Hugging Face

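You can download a policy model from Hugging Face directly in the Web UI. Detailed steps are provided below in the Download Policy Model from Hugging Face tip under 4. Run Inference > c. Enter Task Instruction and Policy Path.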

If you choose this option, you can proceed directly to the next step: 👉 3. Bring up OMY Follower Node

Option 2) Manually copy your model to the target directory

Please place your trained model in the following directory:

USER PC

<your_workspace>/physical_ai_tools/lerobot/outputs/train/

Models trained using Physical AI Tools are automatically saved to that path. However, if you downloaded the model from a hub (without using Physical AI Tools) or copied it from another PC, you need to move the model to that location.
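If you copy a model by hand, the move amounts to placing the whole model folder under the train/ directory. The shell function below is a minimal sketch of that step, assuming the source folder already follows one of the layouts described below; install_model and its two arguments are hypothetical names, not part of Physical AI Tools.

```shell
# Hypothetical helper: copy a model folder into <workspace>/physical_ai_tools/lerobot/outputs/train/.
# Usage: install_model <model_folder> <workspace>
install_model() {
  local src="$1"          # model folder downloaded from a hub or copied from another PC
  local workspace="${2%/}" # your <your_workspace> directory, trailing slash removed
  local dest="$workspace/physical_ai_tools/lerobot/outputs/train"
  local target="$dest/$(basename "$src")"

  if [ ! -d "$src" ]; then
    echo "error: $src is not a directory" >&2
    return 1
  fi
  # Refuse to overwrite, since cp -r would otherwise nest the folder inside the existing one.
  if [ -e "$target" ]; then
    echo "error: $target already exists" >&2
    return 1
  fi
  mkdir -p "$dest"
  # Copy the folder itself (not only its contents) so it keeps its own name.
  cp -r "$src" "$dest/"
  echo "$target"
}
```

For example, `install_model ~/Downloads/my_model ~/my_workspace` (both paths hypothetical) copies the folder and prints its new location.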

Available Folder Structures

All of the folder structures in the tree below are valid (example_model_folder_1, 2, and 3):

<your_workspace>/physical_ai_tools/lerobot/outputs/train/
├── example_model_folder_1/
│   └── checkpoints/
│       ├── 000250/
│       │   ├── pretrained_model/
│       │   │   ├── config.json
│       │   │   ├── model.safetensors
│       │   │   └── train_config.json
│       │   └── training_state/
│       │       ├── optimizer_param_groups.json
│       │       ├── optimizer_state.safetensors
│       │       ├── rng_state.safetensors
│       │       └── training_step.json
│       └── 000500/
│           ├── pretrained_model/
│           │   └── (...)
│           └── training_state/
│               └── (...)
├── example_model_folder_2/
│   ├── pretrained_model/
│   │   └── (...)
│   └── training_state/
│       └── (...)
└── example_model_folder_3/
    ├── config.json
    ├── model.safetensors
    └── train_config.json

INFO

After placing the model in the above directory, you can access it from within the Docker container at:

/root/ros2_ws/src/physical_ai_tools/lerobot/outputs/train/
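Since the same folder is visible at a fixed location inside the container, translating a USER PC path into the container path is a simple prefix swap. The function below sketches this, assuming exactly the two prefixes shown in this section; to_container_path is a hypothetical helper name. The container form is the one to use in the Web UI, because the Policy Path is resolved inside the container.

```shell
# Hypothetical helper: map a host path under the train/ directory to the container path.
# Usage: to_container_path <host_path> <workspace>
to_container_path() {
  local host_path="$1"
  local workspace="${2%/}"  # drop a trailing slash so the prefix matches
  local host_prefix="$workspace/physical_ai_tools/lerobot/outputs/train/"
  local container_prefix="/root/ros2_ws/src/physical_ai_tools/lerobot/outputs/train/"

  case "$host_path" in
    "$host_prefix"*)
      # Strip the host prefix literally and prepend the container prefix.
      echo "${container_prefix}${host_path#"$host_prefix"}"
      ;;
    *)
      echo "error: $host_path is not under $host_prefix" >&2
      return 1
      ;;
  esac
}
```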

3. Bring up OMY Follower Node

WARNING

The robot will start moving when you run the bringup command. Please be careful.

Enter the Open Manipulator Docker container:

ROBOT PC

cd /data/docker/open_manipulator/docker && ./container.sh enter

Then, launch the OMY follower node:

ROBOT PC 🐋 OPEN MANIPULATOR

ros2 launch open_manipulator_bringup omy_f3m_follower_ai.launch.py ros2_control_type:=omy_f3m_smooth

4. Run Inference

a. Launch Physical AI Server

WARNING

If the Physical AI Server is already running, you can skip this step.

Go to the physical_ai_tools/docker directory:

USER PC

cd physical_ai_tools/docker

Enter the Physical AI Tools Docker container:

./container.sh enter

Then, launch the Physical AI Server with the following command:

USER PC 🐋 PHYSICAL AI TOOLS

ai_server

b. Open the Web UI

Open your web browser and navigate to the Web UI (Physical AI Manager).

(See Dataset Preparation > Recording > 1. Open the Web UI.)

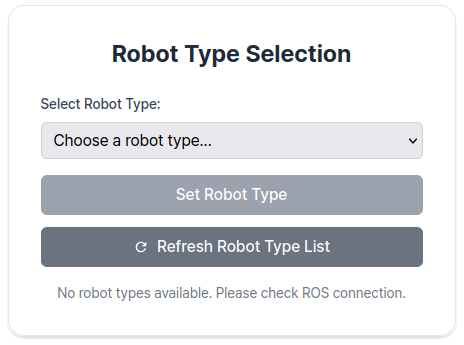

On the Home page, select the type of robot you are using.

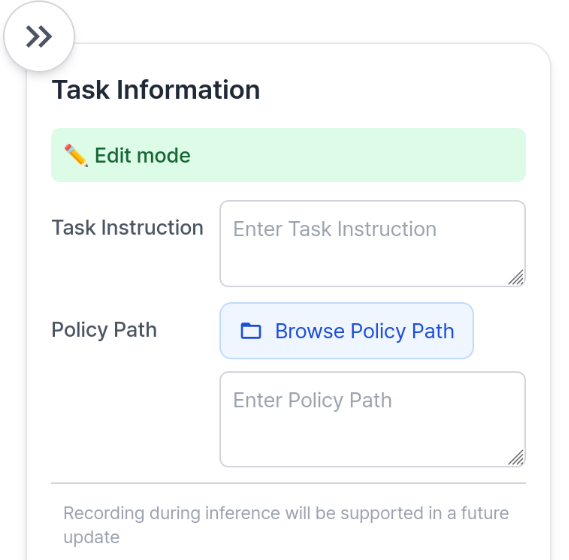

c. Enter Task Instruction and Policy Path

Go to the Inference page.

Enter the Task Instruction and Policy Path in the Task Info panel on the right side of the page.

- Task Information Field Descriptions

| Item | Description |
|---|---|
| Task Instruction | A sentence that tells the robot what action to perform, such as "pick and place object". |
| Policy Path (🐋 PHYSICAL AI TOOLS) | The absolute path to your trained model directory inside the Docker container (🐋 PHYSICAL AI TOOLS). It should point to the folder that contains your trained model files, such as config.json, model.safetensors, and train_config.json. See the Policy Path Example below for reference. |
| FPS | The control frequency used during inference. Set it to the same FPS at which the training data for your model was collected. |
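To look up the FPS a dataset was recorded at, you can read it from the dataset's metadata. The sketch below assumes a LeRobot-format dataset that keeps its metadata in meta/info.json with an "fps" key; dataset_fps and control_period_ms are hypothetical helper names, and the dataset directory is whatever location your dataset is stored in. The second function shows the arithmetic behind "control frequency": at N FPS, one control step runs roughly every 1000 / N milliseconds.

```shell
# Hypothetical helper: print the "fps" value from <dataset_dir>/meta/info.json.
# Assumes the LeRobot dataset layout; uses grep so that jq is not required.
dataset_fps() {
  local info="$1/meta/info.json"
  if [ ! -f "$info" ]; then
    echo "error: $info not found" >&2
    return 1
  fi
  # Take the first "fps": <integer> entry and keep only the number.
  grep -o '"fps": *[0-9]*' "$info" | head -n 1 | grep -o '[0-9]*$'
}

# Hypothetical helper: control period in milliseconds for a given FPS (one decimal place).
control_period_ms() {
  awk -v fps="$1" 'BEGIN { if (fps <= 0) exit 1; printf "%.1f\n", 1000 / fps }'
}
```

For example, `control_period_ms 15` prints `66.7`, so a model trained on 15 FPS data expects a new control step about every 67 ms.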

TIP

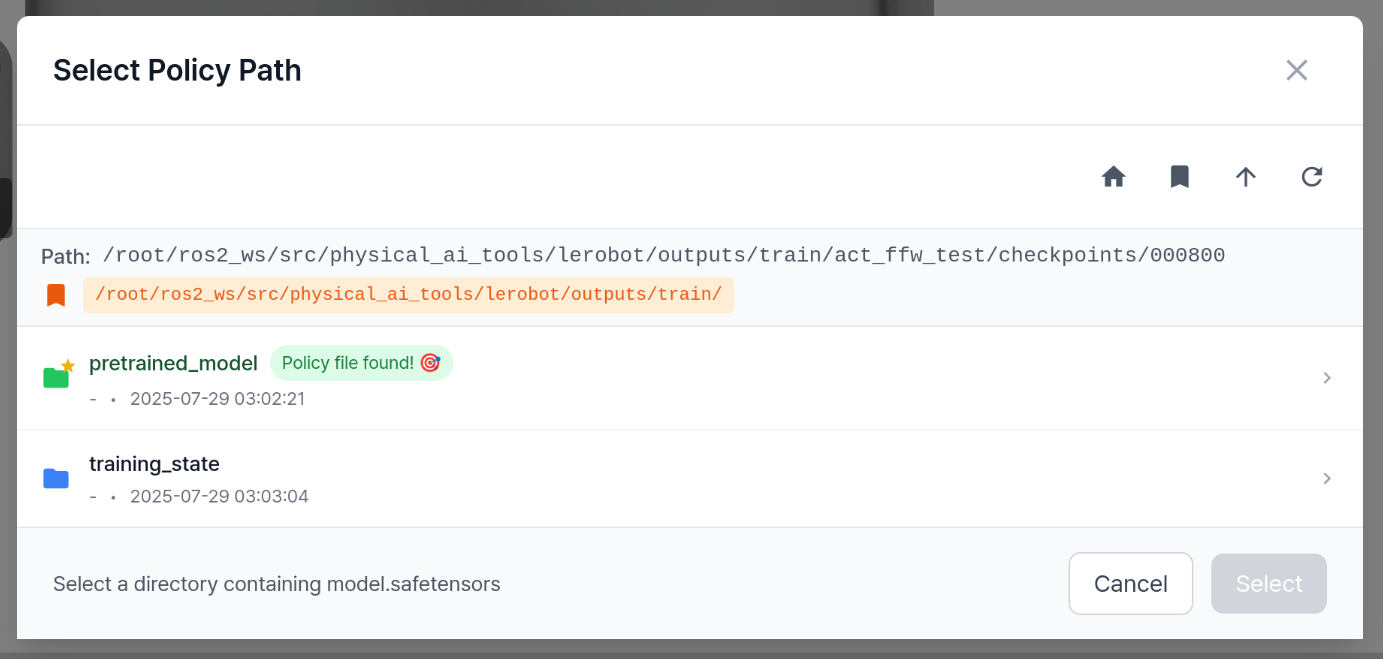

Entering Policy Path

You can either click the Browse Policy Path button to select the model folder, or enter the path directly in the text input field.

TIP

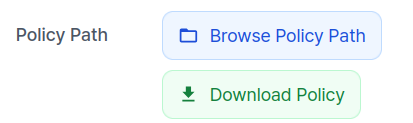

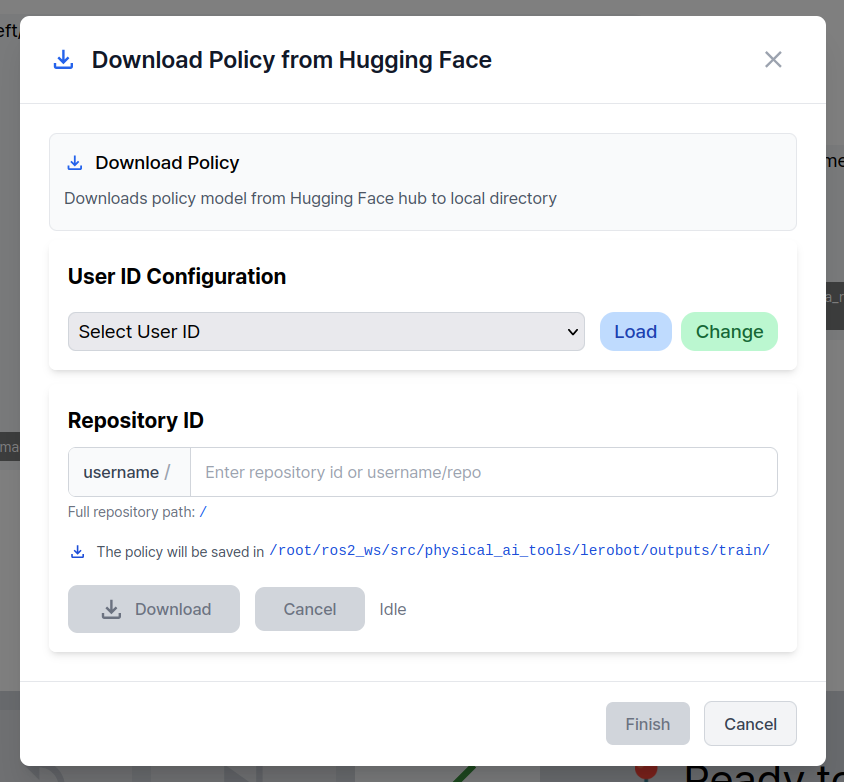

Download Policy Model from Hugging Face

You can download your model from Hugging Face.

Click the Download Policy button to open a popup for downloading a policy model.

Select the Hugging Face User ID and enter the repository to download.

Click the Download button to start the download. A progress indicator will be displayed.

When the download completes, click Finish to close the popup.

The downloaded model path is automatically entered in the Policy Path field.

💡 Note (progress indicator): Progress is measured by the number of completed files. Because policy models often consist of only a few very large files, the progress bar may not change for a while.

INFO

Policy Path Example

/root/ros2_ws/src/physical_ai_tools/lerobot/outputs/train/
└── example_model_folder/
    ├── pretrained_model/  # ← This folder contains config.json, model.safetensors, train_config.json
    │   ├── config.json
    │   ├── model.safetensors
    │   └── train_config.json
    └── training_state/
        ├── optimizer_param_groups.json
        ├── optimizer_state.safetensors
        ├── rng_state.safetensors
        └── training_step.json

For a model folder structure like the one above, the Policy Path would be:

/root/ros2_ws/src/physical_ai_tools/lerobot/outputs/train/example_model_folder/pretrained_model/
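When a training run has produced several checkpoints, it can help to list every folder that qualifies as a Policy Path. The sketch below searches the training output directory for folders that hold all three files named above (config.json, model.safetensors, train_config.json); find_policy_paths is a hypothetical helper, and its default directory is the container path from this example.

```shell
# Hypothetical helper: list candidate Policy Paths (folders with all three model files).
# Usage: find_policy_paths [train_dir]
find_policy_paths() {
  local train_dir="${1:-/root/ros2_ws/src/physical_ai_tools/lerobot/outputs/train}"
  # Find every config.json, then keep only folders that also hold the weights
  # and the training config. -print0 / read -d '' keeps paths with spaces intact.
  find "$train_dir" -type f -name config.json -print0 |
    while IFS= read -r -d '' cfg; do
      dir="$(dirname "$cfg")"
      if [ -f "$dir/model.safetensors" ] && [ -f "$dir/train_config.json" ]; then
        echo "$dir/"
      fi
    done | sort
}
```

Run inside the Physical AI Tools container, this prints one line per candidate; for the example structure above it prints the pretrained_model/ path, which can be pasted into the Policy Path field.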

INFO

Recording during inference will be supported in a future update. Coming soon!

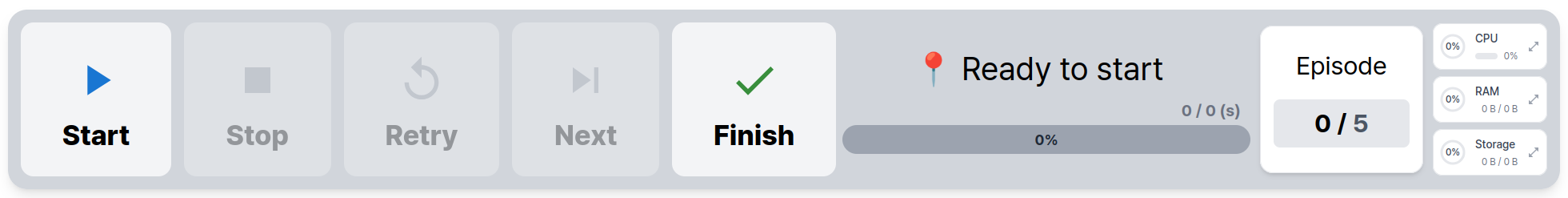

d. Start Inference

To begin inference, use the Control Panel located at the bottom of the page:

- The Start button begins inference.
- The Finish button stops inference.